Tools for extracting information from data

Generative AI has expanded, but not replaced, traditional tools for extracting insights from data

Published by Luigi Bidoia. .

Strumenti Management Analysis tools and methodologiesData and information are two very similar concepts, but, in the field of information management, they have very distinct meanings:

- data are raw, unprocessed numbers, texts, images that on their own have no immediate meaning or lack context. For example, a series of numbers such as 10, 20, 30 are given. In the absence of additional context (or metadata to complement them), these numbers by themselves provide no useful information;

- Information is data that has been processed or organized in a way that adds value or context, making it useful and meaningful for specific decision-making processes. For example, if those numbers (10, 20, 30) represent temporal recordings of the market prices of a commodity, they become useful information to understand that that commodity has become scarce.

If data is, in a certain sense, the new oil, without refined analysis and interpretation processes that extract information from it, it loses its potential richness.

In this context, it is difficult to underestimate the value of the processes of extracting information from data[1].

The role of large language models

In recent years, artificial intelligence has made notable advances, transforming itself into a crucial tool for analyzing and extracting information from massive volumes of data.

In 2023, Generative AI via ChatGPT marked an era, offering users unprecedented access to a huge amount of information via simple text queries. Its main strength lies in the ability to interact in an intuitive and contextualized way, facilitating a direct and personalized user experience. However, the breadth of knowledge areas potentially covered may impact the accuracy of responses. To overcome this limitation, new specialized tools are being developed capable of providing accurate and detailed dialogues in specific areas, significantly improving the relevance and quality of the information provided.

However, generative artificial intelligence demonstrates exceptional performance when, as ChatGPT does, it processes textual data, taking advantage of the ability of Large Language Models (LLM) to understand and generate natural language in a coherent and contextualized. However, when dealing with predominantly numeric or highly technical data, the results tend to be less meaningful.

The role of other tools

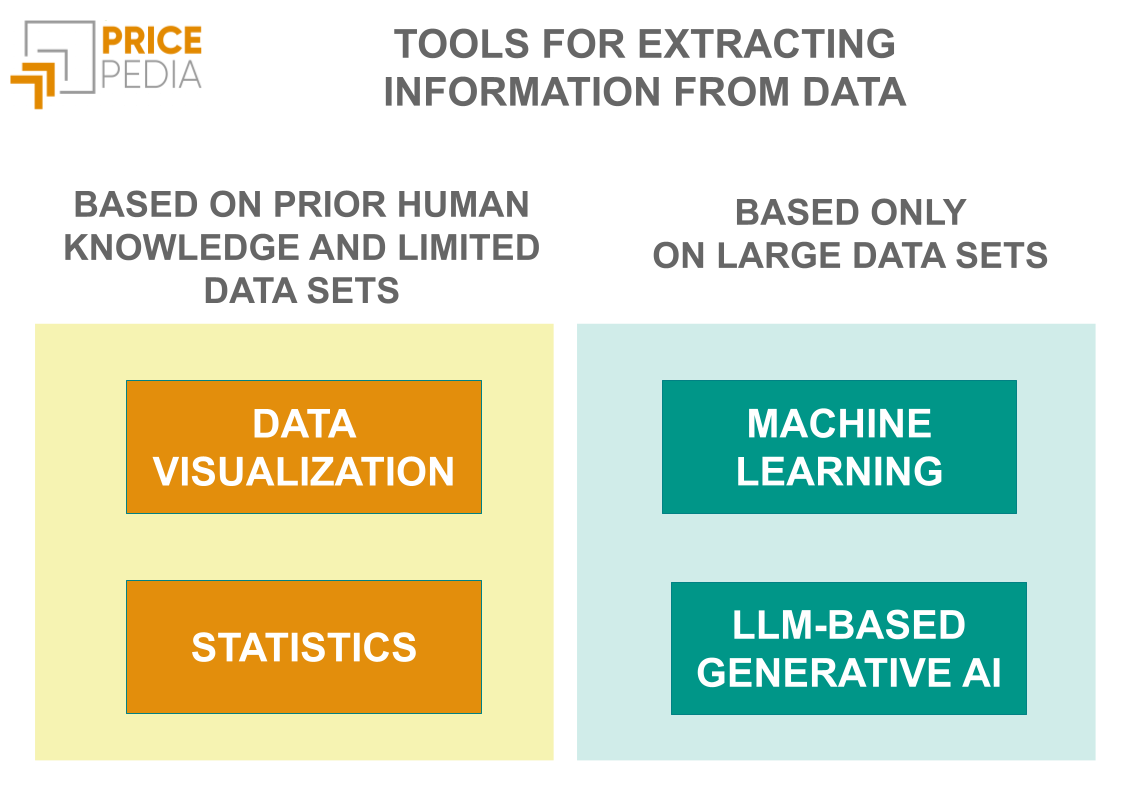

When dealing with predominantly numerical data, consolidated and well-established techniques, such as data visualization, statistics and machine learning prove to be much more efficient tools than large generative models.

Data visualization

"Data visualization" is the set of graphic visualization and data table techniques used to represent, analyze and communicate information and data. This field, based on the knowledge of the user, combines elements of graphic design, statistics, and data science to transform complex, unstructured data into clear, intuitive visual formats, allowing users to better understand trends, patterns, and anomalies. "Data visualization" includes a range of tools and techniques ranging from charts (such as histograms, line graphs, bar graphs, pie charts, scatter plots, etc.) to maps, up to data tables, each with its own use cases and contexts in which it is most effective. Graphical visualizations are particularly powerful for highlighting data relationships and dynamic patterns; data tables, on the other hand, offer detail and precision, essential for in-depth analysis.

Statistics

Statistics deals with the collection, analysis, interpretation and presentation of data. By applying rigorous quantitative methods, statistics provides tools and techniques for understanding and interpreting relationships within data, allowing users to test hypotheses with precision.

Statistics is not simply limited to extracting information from data, but begins with the formulation of hypotheses based on prior knowledge or existing theories. The data is then used as a tool to test the validity of these hypotheses. For example, a researcher might hypothesize that there is a causal relationship between exercise and cardiovascular health. Statistics serves to verify whether this hypothesis, derived from medical science, is confirmed or not by the data and therefore can become reliable information, useful for making informed decisions.

Machine Learning

Machine learning is a branch of artificial intelligence that allows you to extract useful information from large data sets. One of the capabilities of machine learning involves identifying complex, non-obvious patterns in data that may not be immediately obvious or detectable even through traditional statistical methods. These patterns may include correlations, trends, clusters, or anomalies that are not easily discernible due to the large size or complexity of the datasets.

Once identified, these patterns can be used to make predictions about new data. For example, after being trained on historical data about user behavior on a website, a model could predict which users are most likely to make a purchase in the future. This ability to predict makes machine learning extremely useful in a variety of fields, including finance, healthcare, and marketing.

In addition to making specific predictions, machine learning models can also identify broader trends in data. For example, a model might reveal a positive correlation between purchases of diapers and purchases of beer[2] in a supermarket, suggesting a relationship that might not otherwise be apparent.

Conclusions

With the advent of generative artificial intelligence based on Large Language Models, the toolbox for extracting information from data has been significantly enriched, introducing advanced natural language analysis and interpretation capabilities. This innovation, however, does not make traditional methods such as data visualization and statistics obsolete, nor does it minimize the importance of machine learning, which remain fundamental tools in data analysis. While Large Language Models offer extraordinary text processing capabilities, machine learning provides a broad spectrum of applications, from classification to prediction, essential for deciphering complex patterns in data. Both of these new tools complement traditional methods, which continue to be valuable for their ability to visually illustrate data and conduct rigorous quantitative analysis. In particular, machine learning is crucial when it comes to identifying non-obvious trends, predicting future events and optimizing processes based on large data sets, where the computational power and algorithmic approach can offer distinctive advantages. In this technological ecosystem, traditional methods, machine learning and generative artificial intelligence coexist as complementary tools, each with its specific role depending on the context and analysis objectives, ensuring an approach to information extraction that is both robust and versatile.

[1] It may be useful to point out that the "process of extracting information from data" refers to a broader scope of activity than the process of "Information Extraction" (IE). The latter is a research field in artificial intelligence and natural language processing that deals with the automatic extraction of structured and relevant information from unstructured texts. The goal is to automatically identify and classify specific information in written texts, such as names of people, organizations, locations, dates, relationships between entities, etc., and to organize this information in an easily accessible and manageable format, such as databases or spreadsheets calculation.

[2] The story linking the purchase of diapers and beer is a rather famous anecdote in the field of data mining and business intelligence. The anecdote claims that, through analyzing customer transactions, a supermarket discovered a correlation between the purchase of diapers and beer. This story is often used as a simplified example to illustrate the concept of association in data mining which may seem counterintuitive but is revealed by data analysis, providing the analyst with an unexpected insight.