Tokens & Transformers: the heart of modern Machine Learning models

How unified technology has accelerated the evolution of AI

Published by Luigi Bidoia.

The Variety of Inputs and the Historical Fragmentation of AI

For decades, automatic—or “intelligent”—information processing developed along separate paths, often isolated from one another. Depending on the type of data to be analyzed or the kind of problem to be solved, distinct areas of study emerged:

- Human language: text interpretation and dialogue;

- Vision: recognizing objects and categories from images;

- Audio: distinguishing sounds, speech, and music;

- Time series: forecasting values over time;

- Decision-making and control: games (checkers, chess, Go), agents, and reinforcement learning.

Each type of input or problem gave rise to its own research area, with specialized approaches, tools, and models. This fragmentation, natural in the early stages of AI, had an evident drawback: it made it hard to exploit synergies between fields, slowed the transfer of ideas from one area to another, and made it difficult to combine multiple forms of information in a single system.

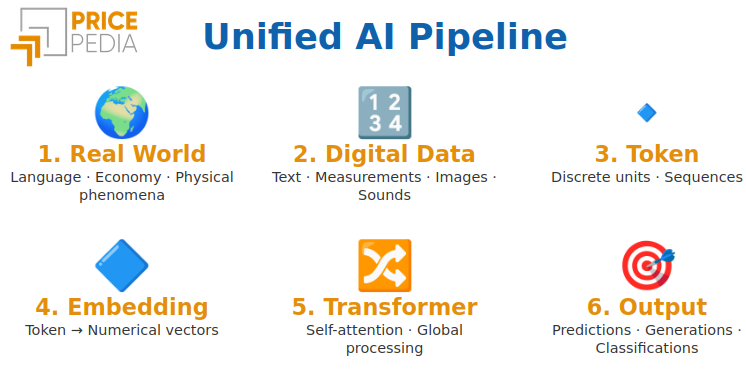

A major breakthrough occurred when it became clear that there was a way to represent any type of input—text, images, audio, temporal data—in a common format, allowing a single architecture to process them all in the same way. The core of this revolution is the token[1].

The Token as a Technical Unit of Representation

To process very different types of data (text, images, audio, time series), modern artificial intelligence relies on a simple principle: instead of handling each input in its original complexity, it first breaks it down into elementary units arranged in a sequence. These units are called tokens.

A token is a discrete part of the data:

- a word or subword fragment in text,

- a small patch in an image,

- a temporal window in audio,

- a single measurement of an economic indicator.

Regardless of the original type of information, every input can be broken into these minimal elements and then arranged in a precise order, forming a sequence. Order is essential: it changes the meaning of a sentence, the structure of an image, and the evolution of a phenomenon over time.
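The splitting step can be made concrete with a small NumPy sketch. It is an illustration under simple assumptions, not the tokenizer of any particular model: text is split on whitespace (production systems use subword schemes such as BPE), an image is cut into non-overlapping square patches read row by row, in the spirit of the Vision Transformer, and a series is cut into consecutive windows. The function names and the patch and window sizes are hypothetical.

```python
import numpy as np

def text_tokens(sentence):
    # Toy word-level split; real systems use subword schemes such as BPE.
    return sentence.lower().split()

def image_patches(image, patch):
    # Cut an (H, W, C) image into non-overlapping patch x patch squares,
    # read row by row, and flatten each square into one vector (one token).
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be a multiple of patch"
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)           # (rows, cols, patch, patch, C)
    return grid.reshape(-1, patch * patch * c)     # (num_tokens, token_dim)

def series_windows(series, window):
    # Split a 1-D series into consecutive non-overlapping windows.
    # window=1 gives one token per measurement; an incomplete tail is dropped.
    n = len(series) // window
    return series[: n * window].reshape(n, window)

img = np.zeros((32, 32, 3))
print(text_tokens("Prices rose in March"))
print(image_patches(img, 8).shape)                 # (16, 192): 16 patches of 8*8*3 values
print(series_windows(np.arange(12.0), 4))
```

With `window=1` each measurement becomes its own token, as in the list above; larger windows group several consecutive measurements into one token, which some time-series models do. In every case the output is an ordered array whose rows are the tokens.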

To be processed by a model, each token is then converted into a vector of numbers through an operation called embedding, producing an embedded token. This vector representation of tokens, organized within the sequence, is the actual unit on which modern models compute relations, dependencies, and statistical patterns. This transformation — from raw data to a sequence of embedded tokens — is what makes it possible to use a single model architecture, such as the Transformer, to handle information of very different kinds.
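A minimal sketch of the embedding step, assuming NumPy, a hypothetical four-word vocabulary and an 8-dimensional embedding. Random matrices stand in for weights that a real model would learn. Discrete tokens use a lookup table; continuous tokens such as patches or windows use a linear projection. Because attention by itself does not know the order of tokens, the sketch also adds sinusoidal position codes, the scheme of the original Transformer and only one of several options for encoding order.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model = 8  # hypothetical embedding size

# Discrete tokens: one row per vocabulary entry (random here, learned in practice).
vocab = {"prices": 0, "rose": 1, "in": 2, "march": 3}  # hypothetical toy vocabulary
embedding_table = rng.normal(size=(len(vocab), d_model))

def embed_words(words):
    ids = np.array([vocab[w] for w in words])
    return embedding_table[ids]                      # (seq_len, d_model)

# Continuous tokens (patches, windows): a linear projection to d_model.
def embed_vectors(tokens, weight, bias):
    return tokens @ weight + bias                    # (seq_len, d_model)

def sinusoidal_positions(seq_len, d):
    # Fixed sine/cosine codes so that each position gets a distinct vector.
    assert d % 2 == 0, "d must be even"
    pos = np.arange(seq_len)[:, None]
    i = np.arange(d // 2)[None, :]
    angles = pos / (10000 ** (2 * i / d))
    pe = np.zeros((seq_len, d))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

words = ["prices", "rose", "in", "march"]
x_text = embed_words(words) + sinusoidal_positions(len(words), d_model)

windows = np.arange(12.0).reshape(3, 4)              # 3 time-series tokens of 4 values each
w_proj = rng.normal(size=(4, d_model))
b_proj = np.zeros(d_model)
x_series = embed_vectors(windows, w_proj, b_proj) + sinusoidal_positions(3, d_model)
print(x_text.shape, x_series.shape)                  # (4, 8) (3, 8)
```

Whatever the source modality, the result has the same form: one row of `d_model` numbers per token, in sequence order. This shared form is what lets one architecture process all of them.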

The Transformer: global sequence processing

Once a complex datum has been transformed into a sequence of embedded tokens — that is, an ordered list of numerical vectors — the architecture that revolutionized modern artificial intelligence comes into play: the Transformer.

Its defining feature is that it does not process tokens one at a time, as earlier recurrent architectures did. Instead, it analyzes the entire sequence globally and simultaneously, considering all elements together.

For each token in the sequence, the model evaluates which other tokens carry relevant information and how much weight they should have.

This process is known as self-attention: a mechanism that allows every embedded token to “look at” all the others, dynamically assessing their mutual importance.

In this way, the Transformer captures both local and long-range relationships, even between elements that are far apart in the sequence.
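Self-attention can be written in a few lines. The sketch below is a single-head version of scaled dot-product attention, assuming NumPy; the projection matrices `w_q`, `w_k` and `w_v` are hypothetical stand-ins for learned weights, and real models add multiple heads, masking and further layers. Row `i` of the weight matrix records how much token `i` draws on every other token, regardless of how far apart they are in the sequence.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (seq_len, d_model) embedded tokens.
    Returns the updated tokens and the (seq_len, seq_len) attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v        # queries, keys, values
    scores = q @ k.T / np.sqrt(k.shape[-1])    # relevance of every token to every other
    weights = softmax(scores, axis=-1)         # each row sums to 1
    return weights @ v, weights                # each output mixes all tokens' values

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))                    # 5 embedded tokens of size 8
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(weights[0, 4])                           # attention from the first token to the last
```

The first and last tokens interact directly through `weights[0, 4]`; no information has to be passed step by step through the tokens in between, which is why long-range relationships are as easy to represent as local ones.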

From a computational perspective, Transformers are designed so that the computations for all tokens in a sequence can run in parallel, making efficient use of modern hardware such as GPUs and TPUs.

This makes them far easier to scale to large datasets and large models than recurrent neural networks, which must process tokens one after another.
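The contrast with sequential models can be sketched as follows, assuming NumPy and hypothetical random weights. In the minimal recurrent network, each hidden state needs the previous one, so the loop cannot be parallelized across positions. In attention, the output for any token depends only on the inputs, so computing tokens one at a time gives the same result as a single batched matrix computation; that independence is what lets GPUs and TPUs handle all positions at once.

```python
import numpy as np

def rnn_states(x, w_x, w_h):
    # Minimal recurrent network: state t depends on state t-1,
    # so this loop is inherently sequential.
    h = np.zeros(w_h.shape[0])
    states = []
    for token in x:
        h = np.tanh(token @ w_x + h @ w_h)
        states.append(h)
    return np.stack(states)

def attention_outputs(x, w_q, w_k, w_v):
    # All positions at once: a few matrix products, no step waits on another.
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    s = q @ k.T / np.sqrt(k.shape[-1])
    s = np.exp(s - s.max(axis=1, keepdims=True))
    return (s / s.sum(axis=1, keepdims=True)) @ v

def attention_one_token(x, i, w_q, w_k, w_v):
    # Output for token i alone: it needs only the inputs, not other tokens' outputs.
    q = x[i] @ w_q
    k, v = x @ w_k, x @ w_v
    s = k @ q / np.sqrt(k.shape[-1])
    p = np.exp(s - s.max())
    return (p / p.sum()) @ v

rng = np.random.default_rng(1)
x = rng.normal(size=(6, 4))                              # 6 embedded tokens of size 4
w_x, w_h = rng.normal(size=(4, 4)), rng.normal(size=(4, 4))
w_q, w_k, w_v = (rng.normal(size=(4, 4)) for _ in range(3))

states = rnn_states(x, w_x, w_h)                         # computed strictly in order
batched = attention_outputs(x, w_q, w_k, w_v)            # all positions together
one_by_one = np.stack([attention_one_token(x, i, w_q, w_k, w_v) for i in range(len(x))])
print(np.allclose(batched, one_by_one))                  # True
```

This parallelism applies when the whole sequence is available, as during training; a model that generates a sequence still produces its new tokens one at a time.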

The learning process

We have already noted that one of the essential functions of a Transformer is to learn the internal relationships within a sequence, that is, how the different embedded tokens interact with one another. In other words, the model discovers which structures, patterns, or configurations tend to appear repeatedly inside a sequence.

But the Transformer goes further: it does not limit itself to identifying patterns within a single sequence.

It also learns the regularities that appear across many different sequences. By observing thousands or millions of examples, the model builds a statistical map of what tends to recur, what tends to follow what, and how certain shapes in the data evolve into others.

Thanks to this learned knowledge, a Transformer can predict the next tokens, generate new sequences consistent with those it has seen, or classify a sequence based on the patterns it contains.
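One common way to learn "what tends to follow what" is next-token prediction, the objective used to train generative language models. The sketch below, assuming NumPy, shows how training pairs are obtained by shifting a sequence, the causal mask that keeps each position from seeing later tokens, and the cross-entropy loss a model would minimize. The token ids are hypothetical, and classification models use different output layers and losses.

```python
import numpy as np

def next_token_pairs(token_ids):
    # Inputs: the sequence minus its last token; targets: the same sequence shifted left by one.
    ids = np.asarray(token_ids)
    return ids[:-1], ids[1:]

def causal_mask(n):
    # mask[i, j] is True when position i may attend to position j, i.e. only j <= i.
    return np.tril(np.ones((n, n), dtype=bool))

def cross_entropy(logits, targets):
    # Mean negative log-probability the model assigns to each true next token.
    z = logits - logits.max(axis=-1, keepdims=True)              # for numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets].mean()

inputs, targets = next_token_pairs([5, 2, 7, 2])                 # hypothetical token ids
print(inputs, targets)                                            # [5 2 7] [2 7 2]
print(causal_mask(3).astype(int))
uniform = np.zeros((len(targets), 10))   # an untrained model: equal scores for 10 possible ids
print(cross_entropy(uniform, targets))   # log(10), about 2.3026
```

Inside attention, the mask is applied by setting disallowed scores to minus infinity before the softmax, so those positions receive zero weight. Training lowers the loss by making the model assign high probability to the tokens that actually follow in many sequences.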

In the case of tokens derived from time-based measurements, this learning concerns both local patterns (slope changes, oscillations, reversals, spikes) and long-term dependencies (trends, seasonality, periodic rhythms). If the measurements involve multiple variables, the model also learns the relationships between variables: whether a change in one variable tends to be followed, after n steps, by a change in another, in which direction, and with what intensity.
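The kind of lead-lag relationship described above can be made concrete on synthetic data. This sketch, assuming NumPy, builds one token per time step from two hypothetical variables, where `y` repeats 0.8 times `x` three steps later plus noise, and then scans lagged correlations. A Transformer does not compute this statistic explicitly; the sketch only shows the regularity (lag, direction, intensity) that the model would have to pick up from data.

```python
import numpy as np

def lagged_correlation(x, y, lag):
    # Correlation between x at time t and y at time t + lag.
    if lag == 0:
        return np.corrcoef(x, y)[0, 1]
    return np.corrcoef(x[:-lag], y[lag:])[0, 1]

def multivariate_tokens(*series):
    # One token per time step: the vector of all variables measured at that step.
    return np.stack(series, axis=1)                  # (time, num_variables)

rng = np.random.default_rng(42)
n, true_lag = 500, 3                                 # synthetic data: y follows x after 3 steps
x = rng.normal(size=n)
y = np.zeros(n)
y[true_lag:] = 0.8 * x[:-true_lag]
y += 0.1 * rng.normal(size=n)

tokens = multivariate_tokens(x, y)
corrs = {lag: lagged_correlation(x, y, lag) for lag in range(8)}
best = max(corrs, key=corrs.get)
print(best)                                          # 3
print(corrs[best])                                   # positive sign: same direction
```

The lag with the highest correlation recovers the delay, its sign gives the direction, and its size gives the intensity. A Transformer fed the `tokens` array would have to learn the same dependency implicitly through attention across time steps.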

In Summary

The decomposition of data into elementary tokens, their transformation into meaningful numerical vectors (embedded tokens), their organization into ordered sequences, and the development of a neural architecture, the Transformer, capable of processing these sequences with exceptional efficiency have helped bring together areas of artificial intelligence that once evolved independently.

Thanks to this unified representation, progress achieved in one domain has rapidly become useful in the others.

The major advances made over the past decade in language processing have paved the way for similarly significant developments in computer vision and, more recently, in time-series forecasting. As discussed in the article “The emergence of fundamental models in time series forecasting”, the large models originally centered on language have been joined by equally powerful models in visual recognition and, today, in the modeling and prediction of numerical data.

Note

[1] Historical note on the concept of the “token”. The term originated in computational linguistics as early as the 1960s, referring to the elementary unit obtained by splitting text. In the 2010s, distributional models such as Word2Vec and GloVe made it standard practice to represent tokens as dense, continuous numerical vectors. With techniques such as Byte Pair Encoding (BPE) and SentencePiece, the token became a flexible, largely language-independent unit. The decisive step toward multimodal unification came with the Vision Transformer (2020), which treated image patches as tokens analogous to those used in text. Since then, tokens have served as a universal representation capable of describing text, images, audio, and structured data uniformly.